Shadow AI Explained: Causes, Consequences, and Best Practices for Control


Updated on February 17, 2026 with new data.

Alphabet CEO Sundar Pichai recently stated that he considers AI to be more profound than fire or electricity. While skeptics may attribute this to a tech leader hyping their product, business leaders across industries agree. McKinsey recently opened a report comparing AI to the steam engine and estimating that the technology holds $4.4T in productivity growth across the economy.

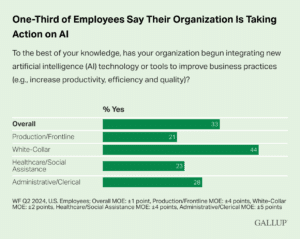

Regardless of what any dissenting business leaders or AI skeptics may think, many workers across the economy are implementing artificial intelligence into their workflows. A recent Gallup poll found that nearly half of all white collar workers admit to using AI for tasks such as generating ideas, automating basic tasks, communicating with coworkers, and learning new things.

Of course, risks and downsides to artificial intelligence also exist. These range from security threats to quality of work issues and ethical concerns. Organizations must be prepared to face novel challenges and solve problems creatively to thrive in the era of generative AI.

The “AI revolution” is both inevitable and game-changing, and business leaders must prepare to seize opportunities while navigating challenges responsibly.

Contrary to popular perception, AI did not enter the workplace with the launch of ChatGPT. It has been embedded in the fabric of business operations for years. AI-driven algorithms have been powering search engines, optimizing logistics, detecting fraud, personalizing ads, and serving many other behind-the-scenes functions for over a decade. While these applications are often invisible and narrowly focused, they do represent a critical and longstanding example of AI tech.

What changed in late 2022 was the way that people interact with AI. OpenAI brought a specific technology known as generative AI to the spotlight. This captured the public imagination and revolutionized the collective perception of artificial intelligence. In contrast to previous highly constrained uses of AI, Gen AI offers a flexible and interactive experience. This has sparked a wave of experimentation and implementation across many industries.

Artificial intelligence in the workplace is not a new phenomenon. However, the economy is entering a new era in which AI will play an increasingly broad and versatile role in nearly every job.

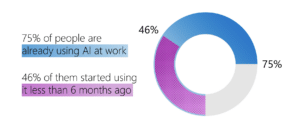

A recent Microsoft report found that 75% of global knowledge workers now use artificial intelligence. This is often self-directed. The same study found that 78% of those who use AI in the workplace are bringing their own tools. While this demonstrates initiative and innovative thinking, it also underscores the lack of a unified vision in many organizations. Worse still, it has the potential to introduce security, ethical, and financial risks through the proliferation of shadow IT.

Now that roughly half of all workers report feeling burned out, the advantages of AI usage are evident: tools like ChatGPT, Claude, and DeepSeek function as digital assistants, drafting messages, analyzing information, and making recommendations. The aforementioned Microsoft study also found that the top 5% of Teams power users save up to one full work day per month through AI summaries alone.

Microsoft categorizes AI users into four broad groups: skeptics, novices, explorers, and power users. The study found that power users save over half an hour per day on rote tasks. Other benefits that these power users report include increased focus and creativity, along with more energy to devote to game-changing projects.

What apps are employees turning to?

Company culture is a significant factor in determining whether employees will become AI power users or remain explorers, novices, or even skeptics. While some people are likely to take action independently and integrate AI into their workflows, most employees follow the lead of company higher-ups. Organizations with an anti-AI culture will inevitably see a far slower and less creative implementation of this type of technology.

Implementing AI into an organization is more like building a puzzle than flipping a light switch. While the decision to use new technologies may seem straightforward, the process of actually implementing them is complex. It involves identifying where AI would be impactful, aligning its usage with the broader organizational strategy, and managing issues such as risk, SaaS spend, legal exposure, employee expectations, and ethics.

Artificial intelligence can assist with many distinct functions. To name just a few potential applications, AI can:

Selecting a few key areas where AI has the highest potential impact and starting small can make the process more manageable for employees and help ensure that AI software tools are used to their fullest potential. As teams gain greater familiarity and experience with artificial intelligence, more tools can be added later based on previous insights and strategic priorities.

Leaders must take care to ensure that AI use aligns with core business strategy and vision. For example, while building an AI chatbot as the default customer service medium may save money or even improve wait times on paper, it could conflict with certain brands that pride themselves on offering human-centric experiences. A cybersecurity or supply chain solution built on AI may be a more appropriate starting point for this type of organization.

Every new technology solution brings risk alongside its opportunities. IT leaders realize this—93% of those we surveyed have varying levels of concerns about the security risks associated with AI tools. AI solutions can amplify the software risks inherent to automation and scalability due to a lack of human oversight.

AI systems can’t judge situations on a case-by-case basis. While an employee could make an exception or alert leadership to an unfair quirk in a refund policy, AI could enforce the policy a thousand times before anyone is even aware that an issue exists.

Tactics to combat these types of situations include:

The legal implications of using AI in the workplace are often unclear or still developing. Employers must be proactive to avoid liability. One element of this that has already been the subject of numerous court cases and emerging legislation is the role AI can play in perpetuating discrimination.

Companies that use artificial intelligence to evaluate hiring, for example, risk placing important decisions in the hands of algorithms that may have been trained on biased datasets. Jurisdictions such as New York City and the State of Colorado have already enacted laws requiring such software to be audited for fairness. Lawsuits have also been filed nationwide due to alleged civil rights violations.

Other legal concerns include data privacy, intellectual property, and employee monitoring. Any tool that processes employee data or analyzes their behavior raises questions around consent and surveillance. Finally, companies must also conduct due diligence around their obligations to clients and vendors before delegating work to AI tools.

While laws governing AI use are still evolving, relying solely on legal compliance is risky. Regulations may lag behind the pace of innovation, which is why companies need strong ethical principles at the core of their AI strategies. Clear standards regarding when and how to use AI and what data should remain off-limits are the foundation of a good workplace AI policy. These guidelines will help you avoid legal jeopardy, reputational damage, and unintended harm to employees or customers.

This starts with transparency. People should be aware of when AI is involved and how it affects decisions about them. Regular audits are essential for identifying bias in training data or outputs, and human oversight must play a crucial role in high-stakes applications, such as hiring or customer service.

Ultimately, using AI at work ethically involves a lot more than simply avoiding harm. It means using technology to support fairness, trust, and improved outcomes for both individuals and the business.

It’s no secret that skepticism and anxiety regarding AI exist in the modern workforce. A study conducted by Bentley University in conjunction with Gallup found that only 10% of adults in the US believe AI currently does more good than harm.

However, these misgivings coexist with more positive sentiments such as a belief in the transformative power of AI. Moreover, many employees also believe (correctly) that mastering the use of AI at work will be critical to remaining valuable and innovative in their roles.

“AI will not take your job, but someone who knows how to use AI better than you might,” has become a mantra on social media platforms such as LinkedIn. Many employees, especially forward-thinking top performers, expect to learn and implement artificial intelligence into their work. If they feel their company is falling behind, they will consider taking their talent elsewhere.

Unfortunately, employees who want to use AI may take an alternative route when their employers don’t provide their own means. The covert use of artificial intelligence in violation of (or in place of) company policy is referred to as “shadow AI.”

This underscores the point that AI is bringing about profound changes in the economy, regardless of how any individual leader may feel about the situation. Your company will be impacted by AI one way or another. The only question is whether you prefer to build your own structured framework with clear and visible software decisions or let those choices happen without you.

As businesses look for ways to stay competitive, integrating AI offers clear advantages. Artificial intelligence tools are making a measurable impact for organizations that prioritize innovation.

Artificial intelligence can automate routine tasks like data entry, note-taking, and drafting simple messages. This gives employees more time to focus on higher-impact tasks. A study from Accenture found that companies with AI-led operations can double the productive output of their peers.

One area where machines can often outperform humans is pattern recognition. AI can sort through large datasets and identify trends and anomalies with greater speed and precision than any analyst could on their own. From predicting churn to optimizing ad spend and forecasting demand, AI empowers organizations with a previously unimaginable level of insight, provided they are willing to innovate.

Artificial intelligence can also automate or facilitate previously challenging or expensive customer-facing tasks. These include providing 24/7 support or tailoring product recommendations to every customer. Tech-forward thinking enables companies to have their cake and eat it too, enhancing customer satisfaction while simultaneously reducing pressure on service representatives. The previously referenced Accenture study found that many innovation-forward companies are already using generative AI in customer service, clearly indicating where the market is headed.

Generative AI can assist in prototyping, content creation, product design, and ideation. A report from the North Carolina Department of Commerce predicts that this will ultimately allow humans to focus on higher-level conceptual work rather than rote tasks. AI reduces the friction between concept and execution, allowing teams to experiment more frequently and at lower cost. As these tools mature, companies that integrate them early will have a first-mover advantage in reimagining products and services.

Aside from the legal and ethical concerns referenced earlier, there are a few downsides to implementing artificial intelligence into an organization’s workflows. These range from everyday cybersecurity challenges to broader concerns about the future of the global economy.

According to Zylo’s 2026 SaaS Management Index, 43% of IT leaders cite exposure of sensitive company data as their biggest concern around the use of AI, followed by regulatory and compliance risks (33%). AI systems are trained on massive datasets that are consistently updated based on user input. One risk this creates is that employees may inadvertently disclose private data by sharing it with a large language model (LLM). This highlights the necessity of providing robust cybersecurity training across all levels of the organization and establishing clear policies for AI usage.

AI software tools typically bill by both user seats and overall usage, creating opportunities for software waste. For example, employees may sign up for several LLM tools before settling on a favorite, yet continue incurring charges from unused licenses. Redundant tools, over-licensed software, and poor renewal management exacerbate this issue.
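To make the unused-license scenario concrete, here is a minimal Python sketch of how a team might flag idle AI seats. It is an illustration only: the `License` record shape, the 60-day idle threshold, the tool names, and the costs are all assumptions made up for this example, not data from any real platform or export.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class License:
    """One paid seat for an AI tool (hypothetical record shape)."""
    user: str
    tool: str
    monthly_cost: float  # illustrative figure, not a real price
    last_used: date


def find_idle_licenses(licenses, today, idle_days=60):
    """Return licenses with no recorded use in the last `idle_days` days."""
    cutoff = today - timedelta(days=idle_days)
    return [lic for lic in licenses if lic.last_used < cutoff]


def monthly_waste(idle):
    """Sum the monthly cost of the idle licenses."""
    return sum(lic.monthly_cost for lic in idle)


# Made-up sample data: one employee tried two tools and kept only one.
licenses = [
    License("ana", "LLM tool A", 20.0, date(2026, 2, 10)),
    License("ana", "LLM tool B", 20.0, date(2025, 10, 1)),
    License("raj", "LLM tool A", 20.0, date(2025, 11, 15)),
]

idle = find_idle_licenses(licenses, today=date(2026, 2, 17))
for lic in idle:
    print(f"{lic.user} has not used {lic.tool} since {lic.last_used}")
print(f"Estimated monthly waste: {monthly_waste(idle):.2f}")
```

In practice the threshold would depend on billing cycles and renewal dates, and usage-based charges would need their own check alongside seat counts.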

Artificial intelligence works best when used to enhance, augment, critique, or build upon human creativity, not replace it. Unfortunately, overreliance on AI technology has become a well-observed phenomenon.

A study from CQ University in Australia reports that over 27% of students demonstrated worse critical thinking skills after implementing large language models. Organizations must take care to ensure that LLMs do not replace critical thought or creativity. Again, clear company policies are a first line of defense here.

We’ve already discussed the potential legal liabilities associated with placing artificial intelligence in the driver’s seat when hiring, promoting, or evaluating talent. Unfortunately, lawsuits are only the tip of the iceberg when it comes to bias-related problems that AI overreliance can invite. Even if your organization never faces legal action, AI bias could still drive suboptimal decisions.

And this goes beyond HR. Artificial intelligence relies on datasets that can be skewed, manipulated, or misinformed in various ways. Once again, human discretion and judgment prove irreplaceable when implementing AI solutions.

While it sounds like the stuff of science fiction, concerns about large-scale economic downturns are quickly growing and gaining legitimacy in the face of increasing AI adoption.

Goldman Sachs has even predicted that artificial intelligence could displace as many as 300 million workers in the coming years. Some good news, though: AI is also creating many roles, to the extent that some managers even worry about keeping up with hiring.

The message for workers is clear: AI will disrupt existing workflows while also creating opportunities, and your future success will hinge largely on your ability to adapt to the changing technological landscape.

Considering these changes, knowledge workers should be proactive about building a role for AI in the workplace. Rather than viewing artificial intelligence as a threat, employees who take a thoughtful approach can use these tools to unlock new levels of creativity, productivity, and career growth.

Here are four principles that can guide these efforts:

AI thrives when it’s given a well-defined task. Rather than approaching an LLM with vague commands like “write a report” or “analyze this,” it’s better to think in terms of clear prompts and desired outcomes. Employees should develop the habit of identifying bottlenecks or repetitive tasks in their day-to-day work and then test how AI can streamline or enhance those specific activities. The most successful AI users aren’t the ones who try to use it for everything, but those who apply it where it counts the most.

Automating without review or critique can do more harm than good. Think of AI as a creative partner or smart intern, not a replacement for your judgment. Review outputs carefully, refine prompts over time, and treat AI-generated content as a rough draft or starting point.

Involving your own expertise in the editing, refinement, and decision-making process ensures the final product meets your standards and aligns with company expectations.

As more AI software enters the market, it’s easy to waste time or make mistakes with tools that don’t suit your workflow. Start by mastering one or two tools that align closely with your role, and build from there.

For example, content creators may derive more value from generative text tools like ChatGPT, while analysts may benefit from AI-powered dashboards or summarizers. If your company provides specific platforms, prioritize those. If not, keep track of what you try and what adds value.

Your ethical compass should guide how you use AI, particularly when applied to areas such as hiring, customer service, or legal and financial decisions. Best practices include:

Below are a few potential use cases that illustrate how artificial intelligence can be leveraged to save time or make better decisions.

A new hire at a SaaS company is trying to understand how pricing models have evolved over the past two years. Instead of sifting through 300 Slack threads and dozens of slide decks, she uses an AI-powered knowledge assistant integrated with Notion and Google Drive.

She types, “Why did we change our enterprise pricing last fall?” and the tool summarizes relevant documents, Slack discussions, and financial performance reports into a 3-paragraph answer with links for further reading.

A CEO drafts one-sentence updates he wants to send to different teams: product, marketing, and customer support. Rather than writing each message manually, he pastes the bullets into ChatGPT and asks it to write professional, motivational Slack messages tailored to each department. Within minutes, he has clear, thoughtful communications that reinforce his leadership voice without eating up his afternoon.

An employee in a mid-sized manufacturing firm wants to move into a data analyst role. She asks her company’s AI career coach, “What skills should I build?” and receives a personalized learning path based on the company’s current tech stack, team structure, and internal job openings. The AI links her to internal training videos, suggests free courses from Coursera, and even gives weekly quiz recaps to track her progress.

A project manager oversees six simultaneous client campaigns and struggles with weekly status updates. She sets up a workflow using Zapier and a GPT integration. Every Friday, the AI pulls updates from task descriptions in Asana, summarizes progress, flags potential delays, and drafts a templated email for each client, ready for her to review and send with a click.

At a retail apparel brand, the logistics team uses AI to monitor historical shipping delays, weather forecasts, and warehouse inventory levels. When an East Coast snowstorm is predicted, the AI flags at-risk shipments and suggests alternate routing strategies. It even proposes adjustments to the week’s promotion schedule to alleviate pressure on the impacted fulfillment centers.

A senior marketer wants to know why a recent email campaign underperformed. Instead of waiting on a full analytics report, she copies the raw data from HubSpot into an AI spreadsheet plugin and prompts: “Why did open rates fall compared to last month?” The AI surfaces a few hypotheses (such as subject line length, send time, and audience fatigue) along with supporting charts and recommends A/B testing subject lines next week.

AI isn’t a plug-and-play tool. Successful adoption requires a structured approach, a clear strategy, and a willingness to experiment and refine over time. The companies that reap the most benefits from AI are those that align it with their goals, train their teams, and build systems that support long-term success.

Without clear guidelines, employees may use AI in ways that expose the business to risk. This highlights the importance of good SaaS governance. A company’s usage policy can provide clarity on where AI is encouraged in the workplace, where human oversight is required, and how to handle sensitive information.

Building an effective policy begins with identifying repetitive tasks, common bottlenecks, and data-intensive workflows where AI can significantly impact productivity or precision.

From there, organizations can outline acceptable and unacceptable use cases. Internal documentation, early content drafts, and meeting summaries are good starting points. Conversely, key strategic decisions, legal communication, and anything influencing customer trust should require human oversight and leadership.

Lastly, a strong AI usage policy should also cover security and transparency. Employees should be aware of what can and cannot be shared with AI, and they should receive clear instructions on when and how to cite AI involvement in a project.
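One way to keep a usage policy from living only in a PDF is to express it as data that tools and reviewers can check against. The Python sketch below is a rough illustration under stated assumptions: the category identifiers are invented labels for the acceptable and oversight-required examples mentioned earlier, and the rule that unlisted use cases default to human review is a design choice made for this example rather than a prescribed standard.

```python
from enum import Enum


class Rule(Enum):
    ALLOWED = "allowed"
    HUMAN_REVIEW = "human review required"


# Hypothetical category names based on the examples in this section.
POLICY = {
    "internal_documentation": Rule.ALLOWED,
    "early_content_draft": Rule.ALLOWED,
    "meeting_summary": Rule.ALLOWED,
    "strategic_decision": Rule.HUMAN_REVIEW,
    "legal_communication": Rule.HUMAN_REVIEW,
    "customer_trust": Rule.HUMAN_REVIEW,
}


def check_use_case(use_case: str) -> Rule:
    """Look up a use case; anything not listed defaults to human review."""
    return POLICY.get(use_case, Rule.HUMAN_REVIEW)


for case in ("meeting_summary", "legal_communication", "pricing_experiment"):
    print(f"{case}: {check_use_case(case).value}")
```

Defaulting unknown cases to review is the conservative option: new uses of AI get a human look until someone deliberately adds them to the allowed list.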

The Harvard Business Review reports that over half of employees feel unprepared to use AI in the workplace. Organizations can support their workers by selecting relevant tools and providing ongoing education and support to facilitate a smooth integration. Not only will this ensure that AI users feel comfortable with their work, it can also help avoid software waste and a proliferation of shadow AI.

Selecting tools that integrate with existing solutions, such as Slack, Notion, or HubSpot, is also wise. This can help AI feel like an augmentation of current capabilities rather than an overwhelming technical reset.

Used wisely, AI can help employees spend less time on drudgery and more time on high-impact work. It can support brainstorming, identify patterns in messy datasets, and summarize complex information in seconds. However, these outputs should be treated as raw materials, not finished products. Human judgment remains the filter that transforms AI input into something strategic, thoughtful, or creative.

To help employees stay ahead, invest in upskilling. Offer training in AI literacy and critical thinking. Let your team in on your long-term AI strategy so they understand how the technology will evolve inside the company. By stepping back to consider the needs and feelings of employees across departments, organizations can avoid the pitfall of asymmetrical AI implementation, which is emerging as a common concern.

The Boston Consulting Group reports that 80% of leaders are utilizing AI, while only 20% of frontline employees are doing the same. The solution to this disparity is emotional intelligence (EQ).

Rolling out AI is not a one-and-done effort. Performance needs to be tracked, evaluated, and refined over time. Set clear metrics before launch. Is the tool speeding up turnaround time? Improving decision-making? Reducing costs? These benchmarks will help you determine whether the technology is making a positive difference.

Regular reviews can also uncover new opportunities or unintended downsides. Perhaps the AI excels at summarizing customer calls, but still struggles with tone in outbound emails. Or it’s generating valuable insights, but it needs better data to pull from. Continue iterating until it generates your benchmark ROI.
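As a rough illustration of setting benchmarks before launch and checking them afterward, the Python sketch below compares baseline and post-rollout numbers against target reductions. Everything in it is assumed for the example: the metric names, the sample figures, and the convention that every metric is "lower is better" with targets expressed as fractional reductions.

```python
def evaluate_rollout(baseline, current, targets):
    """Compare current metrics with the baseline.

    Assumes every metric is "lower is better". Each target is the
    fractional reduction hoped for (0.20 means 20% lower than baseline).
    """
    report = {}
    for name, target in targets.items():
        reduction = (baseline[name] - current[name]) / baseline[name]
        report[name] = {
            "reduction": round(reduction, 3),
            "met": reduction >= target,
        }
    return report


# Made-up numbers for illustration only.
baseline = {"turnaround_hours": 10.0, "cost_per_ticket": 8.0}
current = {"turnaround_hours": 7.0, "cost_per_ticket": 7.6}
targets = {"turnaround_hours": 0.20, "cost_per_ticket": 0.10}

report = evaluate_rollout(baseline, current, targets)
for name, result in report.items():
    status = "met" if result["met"] else "not yet met"
    print(f"{name}: {result['reduction']:.1%} reduction, target {status}")
```

With these sample figures, turnaround time clears its target while cost per ticket does not, which is the kind of mixed result that a regular review is meant to surface.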

At the same time, stay vigilant for potential risks. Review outputs for bias. Track how tools are used across departments and be prepared to course-correct if performance dips or reputational concerns arise. Flexibility is key. The organizations that thrive with AI are the ones willing to adapt and evolve as they learn.

As organizations craft AI usage policies, train teams, and monitor outcomes, it’s equally important to keep an eye on what’s ahead. The economy is evolving fast, and the role of artificial intelligence is set to expand in both scope and sophistication. Organizations must think beyond short-term use cases and start preparing for fundamental shifts in the way we operate. Below are several key trends shaping that future.

AI capabilities are rapidly expanding. Gartner predicts that by 2027, 60% of knowledge workers will utilize generative AI daily. This shift won’t just come from increased adoption of today’s tools—it will be fueled by significant leaps in what AI can actually achieve.

Expect faster and more accurate language models, more integrated multimodal systems (combining text, images, and voice), and greater domain-specific customization. For example, legal, medical, and engineering teams may soon have access to specialized AI assistants trained on industry-specific knowledge and standards.

At the same time, AI will move further into the background. Rather than interacting with stand-alone platforms, employees will increasingly engage with embedded AI across email clients, project management dashboards, sales tools, and even video calls, making AI a seamless part of everyday workflows.

As capabilities expand, so too will the scrutiny. Fortunately, 81.8% of IT leaders reported already having documented policies specifically governing the use of AI tools. The Gartner report cited above also predicts that over 50% of government agencies will establish formal AI risk and ethics frameworks by 2026. Businesses that adopt AI without preparing for these frameworks risk falling behind legally and financially.

In the near future, expect to see:

Forward-thinking companies won’t just aim to comply: they’ll build trust. Public opinion is increasingly focused on “explainable AI” and the ethical implications of automation. Building policies that emphasize fairness, human oversight, and transparency will be essential for long-term success.

As AI takes over more repetitive and rules-based tasks in the workplace, the human role will shift toward creativity, strategy, emotional intelligence, and high-stakes decision-making. Concurrently, 77.6% of the IT leaders we surveyed reported upgrading or investing more in SaaS apps for their AI capabilities over the past year.

New job titles and functions will emerge, such as AI trainer, prompt engineer, or LLM auditor. Cross-training and reskilling programs will become essential to employee retention. Performance metrics will evolve to account for results and AI-enhanced output, rather than raw effort.

Zylo helps organizations take control of their AI journey by delivering clarity, visibility, and governance across SaaS solutions. Our tools help companies identify shadow AI, eliminate redundant licenses, and ensure that every tool aligns with strategic priorities and security standards.

If you’re an IT leader looking to drive efficiency, save money, and reduce risk, and you found this article helpful, learn more about Zylo to see if we fit your needs.

AI in the workplace means using artificial intelligence—like machine learning, NLP, computer vision—to automate tasks, analyze data, and improve decision-making. It enhances productivity and frees employees for more strategic work.

AI boosts efficiency by automating repetitive tasks, delivering real-time insights, improving customer service with chatbots, and enabling smarter hiring and talent management. It helps companies save time and resources while driving innovation.

Top use cases include:

- HR & recruiting: resume screening and candidate matching
- Customer support: AI-powered chatbots and sentiment analysis
- Data analytics: real-time trend detection and forecasting
- Process automation: invoice processing, meeting summaries
- Personalized training: adaptive learning based on employee needs

Rather than replace jobs, AI typically augments human roles by handling repetitive or data-heavy tasks. Human oversight remains critical—especially for strategy, creativity, and emotional intelligence. Studies show AI often redeploys staff to higher-value roles.

Responsible AI integration requires:

- Ethical governance: transparency, accountability, bias mitigation
- Employee training: upskilling staff to work alongside AI
- Privacy safeguards: secure handling of employee and customer data
- Human-in-the-loop systems: combining AI scale with human judgement

Key challenges include data quality and integration, bias in algorithms, lack of skilled talent, and cultural resistance. Addressing these involves strong data infrastructures, diverse teams, governance policies, and change management initiatives.

Zylo’s SaaS Management Platform supports AI-driven decision-making by providing visibility across your software stack, optimizing usage through AI-based analytics, and ensuring compliance and cost control—empowering IT teams to leverage AI strategically.

Return on investment depends on use case—but many businesses report 20%–40% efficiency gains. Savings come from reduced manual labor, fewer errors, faster cycle times, and improved customer experiences.

Start by defining goals (e.g., streamline onboarding, improve reporting). Then look for AI tools that integrate smoothly with existing systems, offer clear accuracy metrics, support customization, comply with security/regulation standards, and provide transparent vendor support.

Explore Zylo’s blog series on AI adoption, case studies from leading companies, and third-party research from Gartner, McKinsey, and Deloitte. We also provide webinars and whitepapers on AI ethics, ROI, and best practices.
