Updated on February 19, 2026 with new stats.

AI tools are reshaping how companies operate—but not without introducing new security blind spots. As more organizations adopt AI-native applications, security leaders grapple with complex risks that don’t follow the same patterns as traditional SaaS vulnerabilities.

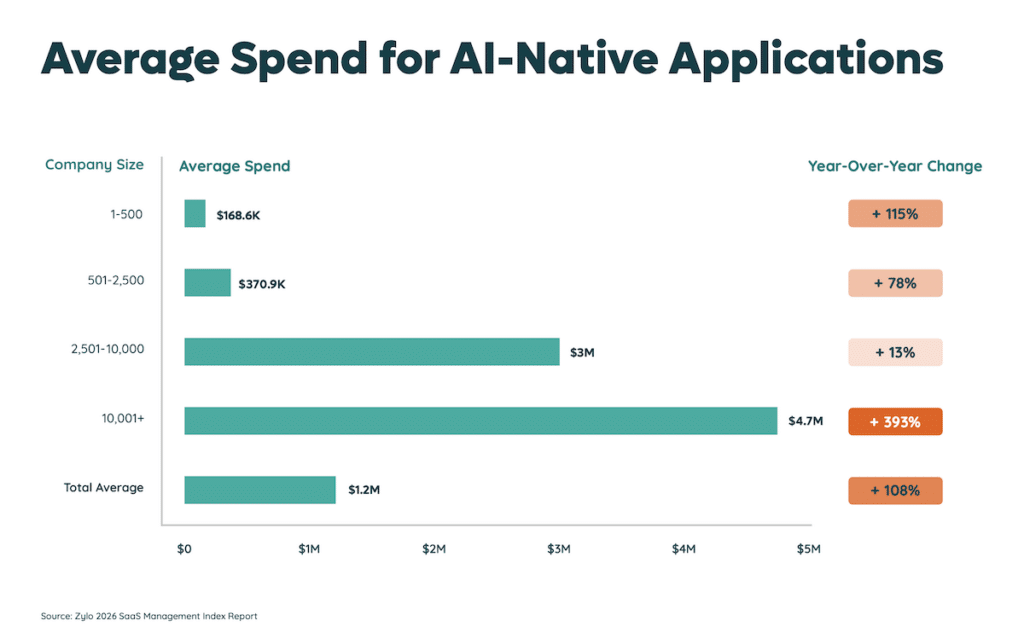

In 2025 alone, spending on AI-native apps rose 108%, averaging $1.2M annually per organization. Among large enterprises, spending grew 393%, averaging $4.7M annually per organization. With widespread adoption of tools like ChatGPT, Grammarly, the OpenAI API, and Otter.ai, the stakes have never been higher. McKinsey reports that half of all companies now use AI in at least two business functions, up from less than a third in 2023. And with 93% of IT leaders expressing some level of concern about company data exposure through AI tools, the conversation is shifting from curiosity to urgency.

This article breaks down the lesser-known AI data security risks—and how IT and security teams can stay ahead using smarter SaaS governance and spend management strategies.

What Is AI Data Security?

AI data security refers to the strategies, policies, and technologies that protect data used, generated, or processed by artificial intelligence systems. Unlike traditional SaaS apps, AI tools often handle vast volumes of proprietary, unstructured, or dynamic data, raising new questions about compliance, transparency, and operational risk.

As organizations invest more heavily in AI-powered apps, the need for visibility and control grows. Zylo’s 2025 SaaS Management Index reveals that 77% of IT leaders discovered AI-powered features or applications operating without IT’s awareness. With nearly half of the apps in the average portfolio receiving a “Poor” or “Low” risk score, these gaps put sensitive data and company operations at risk.

Why AI Data Security Matters

The rise of AI isn’t just a tech trend—it’s a security imperative. Below are key reasons companies must rethink their approach to securing AI systems.

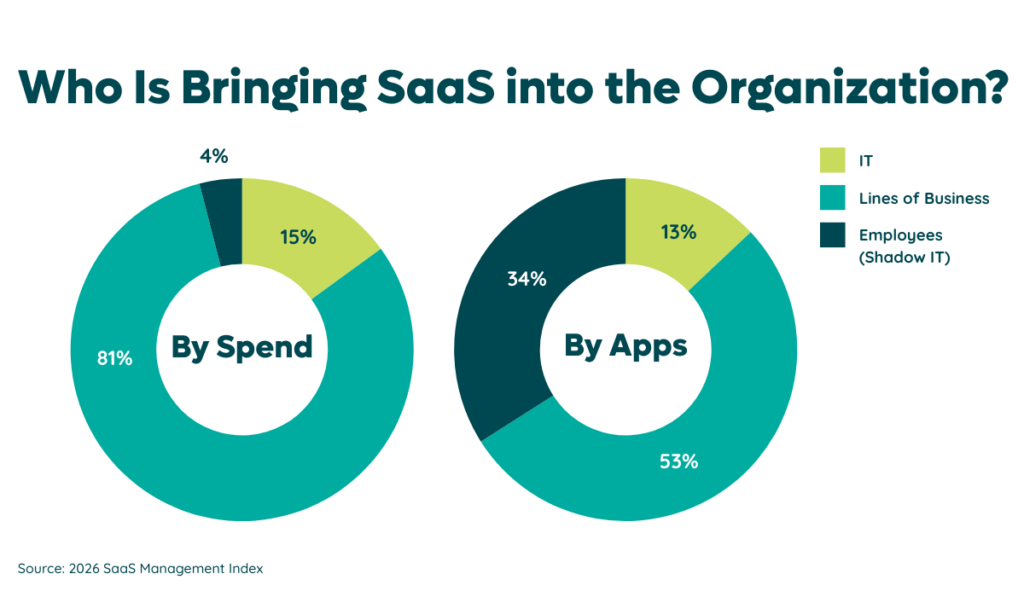

Safeguarding Proprietary and Personal Data

AI models rely heavily on data ingestion, often blending personal information, sensitive IP, or business-critical insights. Even a simple prompt could expose confidential material without proper safeguards, especially if employees use unmanaged apps outside IT’s visibility. Zylo research shows 87% of SaaS apps are purchased outside IT, further escalating the risk of exposure.

Preserving Model Accuracy and Reliability

If an AI model is fed incorrect or malicious data, its performance can degrade, leading to inaccurate outputs or flawed decisions. Ensuring data quality and input controls isn’t just about performance. It’s also about preventing downstream security failures that result from skewed or corrupted models.

Controlling How Models Are Used

Misuse of AI tools, whether intentional or unintentional, can create reputational or legal risks. Misuse, ranging from unauthorized data generation to harmful content creation, often stems from poor guardrails. SaaS governance strategies backed by a risk mitigation solution are essential for identifying gaps and enforcing proper usage.

Building Trust in AI Systems

Without transparency and data accountability, AI tools are difficult to trust, especially for end users and leadership. Ensuring AI systems operate ethically and securely boosts adoption while reducing resistance across teams.

Meeting Regulatory and Legal Requirements

As regulations like the EU AI Act and sector-specific rules emerge, compliance is becoming non-negotiable. AI systems that collect or process personal data must follow strict governance policies. Companies with decentralized SaaS ownership may miss these requirements unless they adopt organization-wide oversight tools like SaaS inventory management.

Securing Predictive Insights at Scale

AI systems often use historical data to generate predictions. Predictions can be misleading or even dangerous if the source data is flawed. Worse, attackers can manipulate training inputs to alter predictive behaviors. AI security must account for the entire lifecycle of data, not just final outputs.

Addressing Lack of Model Transparency

Many AI models are “black boxes,” meaning their logic and decision-making pathways are not easily understood or explained. This opacity makes it difficult for IT and compliance teams to audit decisions or identify irregularities. Increasing visibility into app behavior is key to reducing hidden security gaps. Learn more in our guide on improving security posture with SaaS Management.

Mitigating Risk of Data Tampering

AI tools may ingest data from multiple sources—some of which can be manipulated. Adversarial attacks, for example, can poison training data in ways that subtly alter model behavior. Without strong validation processes and SaaS-level controls, companies may not even know this manipulation is occurring.
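One basic validation control is to record a cryptographic hash of each approved training file and re-check it before every training or fine-tuning run. The Python sketch below assumes datasets are stored as local files and that a trusted manifest (a hypothetical mapping of file names to SHA-256 digests) was captured at approval time. It detects changes made after approval; it cannot catch data that was already poisoned when the manifest was recorded.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large datasets never load fully into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_manifest(manifest: dict[str, str], root: Path) -> list[str]:
    """Compare each file under `root` with the digest recorded at approval time.

    Returns the names of files that are missing or whose contents changed.
    """
    problems = []
    for name, expected in manifest.items():
        path = root / name
        if not path.is_file() or sha256_of(path) != expected:
            problems.append(name)
    return problems
```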

Monitoring Internal Misuse

Even well-meaning employees can pose risks by feeding proprietary data into public AI tools. Worse, insider threats may involve intentional data exfiltration via generative tools. Centralized oversight and eliminating shadow IT can significantly reduce these risks.

Responding to Real-Time Breaches

AI-native apps expand the potential attack surface. If an app is compromised or an integration leaks sensitive data, detection and response must be immediate. This is especially difficult when many tools fall outside traditional security controls. Layering SaaS spend management with governance creates a more complete view of all tools, including unauthorized ones.

Ethical Implications of AI in Enterprise Settings

As AI tools become more embedded in day-to-day operations, ethical questions around how data is used, interpreted, and protected rise to the forefront. For organizations scaling AI adoption, navigating these implications is as vital as managing risk.

Bias in Training Data and Decision-Making

AI models are only as objective as the data used to train them. When training data reflects societal biases or historical inequalities, AI outputs can amplify discrimination, particularly in hiring, lending, or healthcare. Without transparency into model training or active mitigation strategies, companies risk deploying tools that unintentionally treat users unequally. IT leaders must ensure AI tools support inclusive, equitable outcomes—and regularly audit outputs to detect and correct for bias.

Ownership and Use of Generated Content

One of the murkiest areas in AI security is intellectual property. When an AI tool generates code, images, or written content, who owns the result? If proprietary data became part of the training set, there may be legal conflicts over usage rights. Businesses need clear policies around input ownership, licensing, and acceptable outputs, especially in SaaS environments where AI-native tools are adopted without legal review.

Transparency and User Consent

Many generative AI tools collect and store user prompts, sometimes using them to further train their models. If employees aren’t aware that data is being stored—or if they input sensitive information—this creates a major compliance gap.

Users must be informed about how their data is collected and used, especially when AI systems operate as third-party vendors. Implementing centralized visibility tools like SaaS governance helps ensure these practices align with internal and external standards.

Regulatory Pressure and Compliance Gaps in AI

As AI tools become more sophisticated, they’re also drawing more scrutiny from regulators. From data privacy to algorithmic accountability, emerging rules force organizations to rethink how AI fits within existing compliance frameworks. For companies with decentralized SaaS portfolios, managing AI-related compliance adds another layer of complexity.

GDPR and AI-Based Systems

Under the General Data Protection Regulation (GDPR), any use of AI that involves personal data must comply with strict data protection principles, including transparency, consent, and access to meaningful information about the logic behind automated decisions (often described as a right to explanation). Automated decision-making that significantly affects individuals is especially risky: GDPR restricts decisions based solely on automated processing and, where such decisions are permitted, gives individuals the right to obtain human intervention. Businesses using AI-native SaaS apps must verify that these tools offer compliant data handling and opt-out capabilities.

CCPA and AI Use in SaaS Environments

The California Consumer Privacy Act (CCPA) gives consumers the right to know what data is collected, request deletion, and opt out of its sale. When AI tools process or store personal data, especially across third-party SaaS platforms, these rights must be upheld. With most SaaS spending controlled outside of IT, organizations risk violating CCPA simply by not knowing which apps are in use. Tools like SaaS inventory management help ensure data flows are transparent and traceable.

AI Risk Management Frameworks

The U.S. National Institute of Standards and Technology (NIST) released its AI Risk Management Framework (AI RMF) to help organizations address security, privacy, and fairness in AI systems. Though voluntary, it’s quickly becoming a benchmark for responsible AI use. IT and compliance teams can use it to guide internal AI audits and vendor evaluations—especially in enterprise SaaS settings.

Sector-Specific Compliance Expectations

Industries like finance, healthcare, and government face even stricter compliance requirements when adopting AI. For example, HIPAA limits how patient data can be used in AI tools, while the SEC has weighed AI-specific rules for broker-dealers and investment advisers. SaaS vendors serving these sectors must clearly document their AI functionality and data protection measures or risk disqualification during procurement.

Ongoing Challenges in AI Data Security

Even as adoption grows, most organizations are still playing catch-up when protecting data in AI environments. Below are some of the most pressing concerns that security and IT leaders are navigating today.

The Rise of Shadow AI

Just as shadow IT became a risk during the early SaaS boom, shadow AI is creating similar exposure as AI tools are used outside IT’s knowledge or control. Employees may input proprietary data into free tools like ChatGPT or use unmanaged AI plug-ins embedded in existing apps. This kind of decentralized usage bypasses governance and increases risk. Eliminating shadow IT is a critical first step toward identifying unknown AI activity.
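As a rough illustration of that first step, the sketch below reconciles domains observed in SSO, expense, or network data against a list of sanctioned tools. The `KNOWN_AI_DOMAINS` set and the `find_shadow_ai` function are hypothetical; in practice the list of AI services would come from a maintained catalog rather than a hard-coded set.

```python
# Hypothetical, hard-coded catalog of AI service domains for illustration only.
KNOWN_AI_DOMAINS = {"chatgpt.com", "api.openai.com", "grammarly.com", "otter.ai"}


def find_shadow_ai(observed_domains, sanctioned_domains):
    """Return AI service domains seen in use that IT has not sanctioned."""
    observed = {d.strip().lower() for d in observed_domains}
    sanctioned = {d.strip().lower() for d in sanctioned_domains}
    return sorted((observed & KNOWN_AI_DOMAINS) - sanctioned)
```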

Data Breaches Linked to AI-Enabled Tools

Many AI tools are designed to collect user inputs to improve model training. If not properly secured, these logs can become a target. Publicized breaches involving chatbots and transcription tools show how easily sensitive data can leak if stored improperly. This risk is even more pronounced in apps with dynamic user interaction, where logs may contain names, client records, or financial details.

Lack of Access Controls and Role-Based Restrictions

AI-native applications don’t always integrate smoothly with centralized identity providers or follow standard access control models. This can lead to unauthorized internal access, especially when users can create, modify, or extract data without oversight. Enhancing control through SaaS governance solutions helps apply consistent role-based access policies across AI tools.

Flawed Outputs Due to Model Bias or Data Gaps

AI systems rely on complex statistical models that can produce biased, incomplete, or inaccurate results if trained on flawed data. These outputs may not be obviously wrong at first glance, but they can erode trust or introduce poor decision-making. For example, a biased recommendation model could prioritize the wrong candidates, vendors, or security events. IT leaders must continuously test AI performance and correct drift as models evolve.

User Privacy at Risk

AI tools often operate in gray areas regarding user consent and data visibility. When sensitive inputs are processed without disclosure or logged without encryption, privacy violations can occur—even unintentionally. As regulations evolve, tools that lack clear privacy documentation may fall out of compliance. Organizations should prioritize tools that include secure opt-in policies and limit retention of user-generated content.

Top AI Data Risks, Threats, and Privacy Concerns

AI-powered tools come with powerful capabilities and introduce sophisticated threats that most traditional SaaS security frameworks weren’t built to handle. These emerging risks make visibility, governance, and proactive mitigation more critical than ever.

Data Leakage Through Prompt Retention

Many AI tools store user inputs to retrain models or improve product features. If employees input sensitive or regulated data, those prompts could be retained—and potentially exposed. Without policies in place, data leakage may go undetected. Learn how AI risks can emerge quietly across decentralized SaaS stacks.
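A minimal sketch of one such policy is a pre-submission filter that masks sensitive-looking strings before a prompt reaches an external AI tool. The patterns and the `redact_prompt` function are hypothetical examples, not a complete data loss prevention control: simple regular expressions like these will miss many kinds of sensitive data and flag some harmless strings.

```python
import re

# Hypothetical patterns; a real policy would cover the organization's own data types.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def redact_prompt(prompt: str) -> tuple[str, list[str]]:
    """Replace sensitive-looking substrings with labeled placeholders.

    Returns the redacted prompt and the labels of the patterns that matched.
    """
    found = []
    for label, pattern in REDACTION_PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            found.append(label)
    return prompt, found
```

For example, `redact_prompt("Email jane.doe@example.com about SSN 123-45-6789")` returns `("Email [EMAIL] about SSN [US_SSN]", ["EMAIL", "US_SSN"])`.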

Data Poisoning in Model Training

Adversaries can tamper with training data—subtly inserting malicious examples that skew a model’s behavior. These poisoned datasets may degrade accuracy or trigger undesirable outcomes when specific inputs are provided. Since many organizations now fine-tune third-party models, SaaS governance solutions are key to tracking where data flows and who controls it.

Manipulation Through Adversarial Inputs

Small, precisely engineered input changes, such as wording in a prompt or pixel patterns in an image, can trick models into returning harmful, misleading, or manipulated outputs. These adversarial inputs are hard to detect and often bypass basic monitoring. The use of centralized inventory tools can help surface under-the-radar AI tools that are at higher risk of misuse.

AI-Powered Threat Acceleration

Cybercriminals now use AI to create more personalized, scalable, and evasive attacks. From deepfake-enabled phishing to automated social engineering, threat actors are using the same tools enterprises deploy, just for the opposite purpose. Adopting AI-aware SaaS security practices is critical for leveling the playing field.

Model Inversion and Data Reconstruction

Model inversion attacks allow malicious actors to infer or reconstruct sensitive training data, such as images, customer records, or IP, by repeatedly querying a model and analyzing its outputs. These risks are amplified when vendors don’t disclose how data is stored, or when models are integrated across multiple apps. SaaS governance ensures that sensitive model data doesn’t slip through fragmented oversight.

Membership Inference and Training Data Exposure

Attackers can sometimes determine whether specific data records were used to train a model. This creates compliance risks if personally identifiable information (PII) is involved. Without tools that surface unauthorized AI app usage, detecting this kind of exposure is difficult. Shadow IT only makes the problem worse.
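To make the idea concrete, one of the simplest membership inference tests described in the research literature compares a model's loss on a record against a threshold: models are often more confident on records they were trained on, so unusually low loss hints at membership. The sketch assumes the attacker (or an internal red team auditing its own model) can see the probability the model assigns to each record's true label; the threshold would normally be calibrated on data known to be outside the training set.

```python
import math


def cross_entropy(prob_true_label: float) -> float:
    """Per-record loss: near zero when the model is confident in the true label."""
    return -math.log(max(prob_true_label, 1e-12))  # clamp to avoid log(0)


def guess_membership(probs_true_label, threshold: float) -> list[bool]:
    """Loss-threshold membership inference: records whose loss falls below the
    threshold are guessed to have been part of the training set."""
    return [cross_entropy(p) < threshold for p in probs_true_label]
```

With a threshold of 0.5, a record the model scores at 0.99 is flagged as a likely training member, while one scored at 0.4 is not.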

Vulnerabilities in the SaaS Supply Chain

AI tools often rely on third-party APIs, pre-trained models, and open-source libraries. Any of these components can introduce malware or allow unauthorized access if not vetted properly. A spend management system can help trace AI spend across vendors and identify where deeper due diligence is needed.

Mishandling of Personally Identifiable Information (PII)

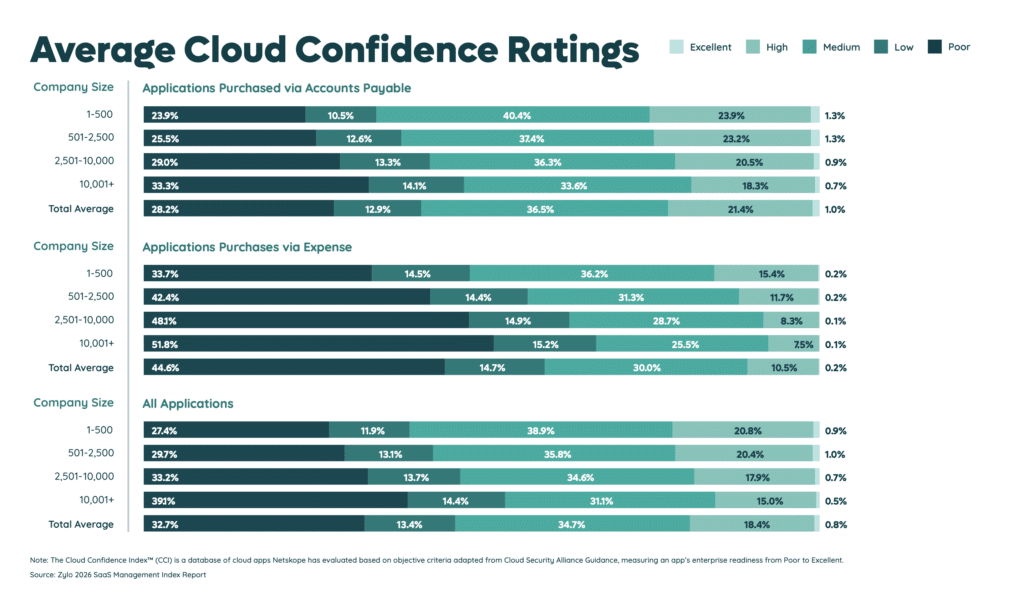

Many AI applications collect or process names, messages, locations, or internal communications, sometimes without encrypting or anonymizing them. If tools retain this data for training, it may be repurposed or leaked. The 2025 SaaS Management Index found that 46% of SaaS apps in a typical portfolio carry a “Poor” or “Low” risk score, showing just how widespread risky apps have become.

Unsecured AI APIs and Integrations

APIs expose powerful model capabilities to users and systems, often without proper authentication or monitoring. Poorly secured APIs are targets for scraping, brute-force prompts, or lateral access across applications. Strong governance and risk mitigation are needed to monitor access and apply rate limiting or audit logs across AI-enabled APIs.
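Rate limiting and audit logging can be sketched with a per-key token bucket, one common rate-limiting technique. Everything below is hypothetical: the `handle_ai_api_call` wrapper, the capacity of 3 calls, and the refill rate of 0.5 calls per second are illustrative values, and a production gateway would persist state and logs outside the process.

```python
import time
from typing import Optional


class TokenBucket:
    """Allow bursts of up to `capacity` calls, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, now: Optional[float] = None):
        self.capacity = capacity
        self.rate = rate
        self.tokens = capacity
        self.updated = time.monotonic() if now is None else now

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # Refill in proportion to elapsed time, capped at capacity.
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Hypothetical in-process state; a real gateway would persist both.
AUDIT_LOG: list[tuple[float, str, str]] = []
_BUCKETS: dict[str, TokenBucket] = {}


def handle_ai_api_call(api_key: str, now: float) -> bool:
    """Rate-limit each API key independently and record every decision."""
    bucket = _BUCKETS.get(api_key)
    if bucket is None:
        bucket = _BUCKETS[api_key] = TokenBucket(capacity=3, rate=0.5, now=now)
    allowed = bucket.allow(now)
    AUDIT_LOG.append((now, api_key, "allowed" if allowed else "throttled"))
    return allowed
```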

Model Drift and Silent Accuracy Decay

AI models don’t stay accurate forever. Over time, changing data, evolving use cases, or malicious interference can cause models to produce inaccurate or unsafe outputs. This decay often goes unnoticed until performance issues arise. Enterprises managing multiple AI-powered apps should implement consistent visibility and testing protocols across tools.
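One common way to catch drift before it shows up as performance problems is to compare the distribution of a model's inputs or scores today against a baseline, for example with the Population Stability Index (PSI). The sketch below assumes both distributions have already been binned into proportions over the same bins; a commonly cited rule of thumb treats PSI above roughly 0.2 to 0.25 as a meaningful shift, though teams typically tune that threshold.

```python
import math


def population_stability_index(expected, actual, eps: float = 1e-6) -> float:
    """PSI between two binned distributions given as lists of bin proportions.

    PSI = sum((a - e) * ln(a / e)); `eps` keeps empty bins from causing log(0).
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi
```

For instance, comparing a uniform baseline `[0.25, 0.25, 0.25, 0.25]` with `[0.1, 0.2, 0.3, 0.4]` gives a PSI of about 0.23.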

Surveillance Overreach from Embedded AI Features

Some SaaS platforms now include embedded AI that tracks user behavior, logs screens, or captures voice commands—often for automation or productivity. While useful, these features may violate internal privacy policies or regional regulations. Enterprise-wide inventory tracking helps flag tools with overreaching functionality.

Risks Introduced by Transfer Learning

Transfer learning allows teams to fine-tune a general-purpose model with proprietary data. However, inherited flaws from the base model—such as bias or poor security controls—can quietly carry over. Organizations need frameworks for vetting reused models and a reliable system of record for AI usage.

Malicious Backdoors in Deployed Models

In some AI systems, backdoors are embedded intentionally during training and are triggered by specific inputs to produce harmful behavior. These are difficult to detect and nearly impossible to audit without transparency from the vendor. Companies using AI across departments must track which models are deployed and how they were sourced, relying on central governance to manage policy enforcement.

How to Strengthen AI Data Security Across the Enterprise

AI security requires more than patching vulnerabilities—it takes proactive planning, shared accountability, and continuous oversight. Below are eleven actionable strategies for building an enterprise-ready AI security posture across a decentralized SaaS environment.

1. Promote Collaboration Between IT, Security, and Business Units

AI adoption often begins outside IT, but governance cannot. Success takes a partnership between IT, Security, and business units.

These teams must work together to assess risk, manage access, and build policies around AI use. Encouraging shared ownership ensures innovation doesn’t outpace protection. A centralized system of record for cloud tools enables alignment across departments.

2. Train Teams on Safe and Ethical AI Use

Awareness is one of the best defenses against misuse. Employees need practical training on identifying insecure AI tools, avoiding uploads of sensitive data, and understanding the implications of public versus private AI platforms. Ongoing education also strengthens security posture organization-wide.

3. Conduct Frequent SaaS Portfolio Audits

Understanding where AI exists across your tech stack is key to managing risk. Regular audits help uncover unauthorized tools, excessive permissions, and data flow concerns. Organizations leveraging SaaS inventory management can identify exposure before it becomes a breach.

4. Standardize Evaluation and Approval of AI Vendors

As adoption scales, inconsistency becomes a liability. Establishing clear standards for vendor evaluation—including transparency, encryption practices, and model behavior—allows for scalable governance. This is especially important when the majority of SaaS apps are purchased outside IT, as reported in Zylo’s 2026 SaaS Management Index.

5. Enforce Access Limits and Application Scopes

Overly broad permissions increase the surface area for attack. Set boundaries around what AI tools can access, and restrict data types based on user roles. These limits should also extend to time-based access, reducing long-term exposure.
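A minimal sketch of role-scoped, time-bound access follows. The role names, data classifications, and tool names are hypothetical; in a real environment these grants would live in an identity provider or governance platform rather than in code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical roles and the data classifications each may send to AI tools.
ROLE_SCOPES = {
    "analyst": {"public", "internal"},
    "hr_partner": {"public", "internal", "employee_pii"},
}


@dataclass(frozen=True)
class AccessGrant:
    user: str
    role: str
    tool: str
    expires_at: datetime  # time-based access: every grant lapses automatically


def grant_for_days(user: str, role: str, tool: str, days: int,
                   now: Optional[datetime] = None) -> AccessGrant:
    """Issue a grant that expires `days` from `now` (defaults to the current UTC time)."""
    if now is None:
        now = datetime.now(timezone.utc)
    return AccessGrant(user, role, tool, now + timedelta(days=days))


def is_allowed(grant: AccessGrant, tool: str, data_class: str, now: datetime) -> bool:
    """Permit a request only if the grant covers this tool, has not expired,
    and the user's role is scoped to the requested data classification."""
    return (
        grant.tool == tool
        and now < grant.expires_at
        and data_class in ROLE_SCOPES.get(grant.role, set())
    )
```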

6. Embed Privacy Controls into Design and Deployment

Build privacy protections into AI implementation from day one. That means enforcing opt-in data collection, pseudonymization, and consent workflows before tools go live. Embedding these practices ensures alignment with GDPR, CCPA, and evolving AI-specific regulations.
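Pseudonymization can be as simple as replacing direct identifiers with keyed hashes before data reaches an AI tool. The sketch below uses HMAC-SHA256 from Python's standard library; the key handling is an assumption (in practice the key would come from a secrets manager, not source code). Under GDPR, pseudonymized data is still personal data, so this reduces exposure rather than removing compliance obligations.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 token.

    The same value and key always produce the same token, so pseudonymized
    records can still be joined; without the key, tokens can't practically be
    linked back to the original values. Truncated to 16 hex characters for
    readability; keep the full digest if collisions matter at your scale.
    """
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()[:16]
```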

7. Require Clear AI Vendor Documentation

Many AI-native tools lack transparency around data handling, model updates, and security controls. Vendors must provide documentation as part of procurement, including how data is processed and stored, and whether user inputs are retained or used for retraining. Tools without this transparency should be flagged for further review using governance controls.

8. Allow Only Pre-approved Models and Frameworks

To reduce risk, organizations should whitelist only those AI tools and model architectures that meet internal standards. These decisions can be based on factors like explainability, auditability, and data usage policies. Anything not on the list should be blocked until validated.

9. Use AI-Aware Anomaly Detection Tools

AI threats often bypass traditional detection systems. Invest in tools designed to flag irregular model behavior, unauthorized API use, or suspicious inputs. Combining AI-based threat detection with SaaS governance closes blind spots across the app ecosystem.
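As a simple illustration of behavioral anomaly detection, the sketch below uses a z-score to flag users whose daily AI-tool usage jumps far above their own baseline. The input format (hypothetical per-user lists of daily prompt or API-call counts) and the threshold of 3 standard deviations are assumptions; commercial tools use far richer signals.

```python
from statistics import mean, pstdev


def flag_usage_anomalies(
    history: dict[str, list[int]],
    today: dict[str, int],
    z_threshold: float = 3.0,
) -> list[str]:
    """Flag users whose usage today is more than `z_threshold` standard
    deviations above their own historical mean."""
    flagged = []
    for user, counts in history.items():
        if len(counts) < 2:
            continue  # too little history to form a baseline
        mu = mean(counts)
        sigma = max(pstdev(counts), 1.0)  # floor avoids dividing by zero on flat baselines
        if (today.get(user, 0) - mu) / sigma > z_threshold:
            flagged.append(user)
    return sorted(flagged)
```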

10. Perform Gap Analysis and Policy Refreshes

The threat landscape is evolving fast. Organizations should perform recurring gap analyses to test whether their current AI security policies are still effective. These findings should inform policy updates and help prioritize SaaS usage rules, procurement, or staff training changes.

11. Engage Outside Experts for Emerging AI Risks

As AI use cases diversify, external expertise may be required to assess model behavior, evaluate black-box tools, or guide compliance. External consultants can also pressure-test internal assumptions and introduce emerging best practices not yet codified in law.

How AI Is Enhancing Cybersecurity and Data Protection

AI is not just a security risk—it’s also a tool for defense. When used strategically, AI can help organizations detect threats faster, reduce incident response times, and make SaaS environments more secure. Here’s how AI is actively reshaping cybersecurity in the enterprise.

Threat Detection in Complex Environments

AI enables faster and more accurate identification of unusual behavior, system anomalies, or known attack patterns across SaaS platforms. By learning from historical data, AI models can flag new threats even when traditional rules-based systems miss them. Organizations using centralized SaaS visibility benefit from AI-powered monitoring at scale.

Enhancing Network Visibility and Protection

AI can analyze traffic patterns and detect irregular flows that may signal data exfiltration, unauthorized API use, or malware communication. This kind of deep network analysis helps secure both cloud-based and hybrid infrastructures. Pairing it with SaaS governance frameworks ensures threats are not missed in third-party integrations.

Strengthening Endpoint Protection

AI tools help identify risks at the device level, such as malware, shadow apps, or unexpected behavioral changes in software. Because endpoints are often where AI tools are accessed (e.g., browser extensions or unmanaged downloads), continuous monitoring can prevent lateral movement or internal compromise.

Automating Security Responses at Scale

AI-driven security platforms can automatically isolate compromised accounts, revoke suspicious tokens, or initiate remediation steps—cutting response times from hours to seconds. This is especially important in SaaS portfolios where hundreds of apps may be running concurrently. Governance tools that integrate with AI security platforms enable faster, policy-aligned action.

Optimizing Vulnerability Management

AI helps security teams prioritize patching and remediation by predicting which vulnerabilities are most likely to be exploited. Instead of chasing every alert, teams can focus on high-impact risks. This triage process reduces alert fatigue and supports a more focused approach to security operations.

Preventing Phishing and Social Engineering

Generative AI is already being used to craft more convincing phishing emails—but it can also be used to stop them. Security tools now use AI to evaluate the tone, content, and context of incoming messages to flag potential fraud. Embedding these tools across cloud applications helps protect end users in real time.

Streamlining Compliance and Audit Reporting

AI simplifies tracking and documenting user behavior, data access, and incident timelines, which is critical for proving compliance during audits. Automated reporting also reduces manual effort for IT and legal teams. Coupling AI with SaaS spend and usage intelligence provides the data foundation for accurate reports.

The Future of AI and Data Security

As organizations become more reliant on AI-driven tools, security practices must evolve just as fast. Emerging technologies are already reshaping how enterprises protect sensitive data, manage risk, and respond to threats across sprawling SaaS ecosystems.

Advancements in Encryption Technology

Next-gen encryption methods are changing how AI workloads can be protected. Homomorphic encryption allows computation on data while it remains encrypted, which may eventually let AI models operate on encrypted datasets without exposing the underlying content. Post-quantum cryptography, meanwhile, is designed to keep encrypted data secure against attacks from future quantum computers. Organizations should evaluate which AI tools are keeping pace with these innovations, especially as compliance demands increase.

AI’s Role in Advanced Security Analytics

AI will play an increasingly central role in helping companies analyze massive amounts of telemetry and behavioral data across SaaS applications. By identifying subtle shifts in user activity or app behavior, AI-driven analytics will improve early warning systems and reduce false positives. Companies investing in SaaS visibility and monitoring will be better positioned to exploit this shift.

Blockchain as a Security Layer for AI Integrity

Blockchain technology offers potential for improving AI auditability, data lineage tracking, and model validation. Distributed ledgers can provide tamper-evident logs of data usage and model changes, supporting transparency and trust. While early-stage, this technology may soon help enterprises verify that third-party AI apps meet strict data handling and access standards.
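The tamper-evidence property at the heart of these proposals can be illustrated without a full blockchain by using a hash chain, where each log entry's hash covers the previous entry's hash. The sketch below is a plain hash chain, not a distributed ledger: on its own it detects edits to earlier entries, but not an attacker who can rewrite the entire log, which is the gap that replication or external anchoring is meant to close. The event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: dict) -> str:
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(chain: list[dict], event: dict) -> None:
    """Append an event whose hash covers its contents and the previous hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"event": event, "prev": prev_hash, "hash": _entry_hash(prev_hash, event)})


def verify_chain(chain: list[dict]) -> bool:
    """Recompute every link; an edited entry fails verification unless the
    attacker also rewrites every later hash."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash or entry["hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```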

Privacy-Preserving Technologies and Synthetic Data

Privacy-preserving methods such as federated learning, differential privacy, and synthetic data generation are gaining traction as companies try to balance personalization with protection. These approaches limit the exposure of real user data while still supporting AI performance, helping companies stay aligned with emerging global regulations.
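Differential privacy, for example, adds calibrated noise to query results so that no single person's record noticeably changes the output. The sketch below applies the standard Laplace mechanism to a counting query; it is illustrative only, and production systems should rely on a vetted differential privacy library, since naive floating-point noise sampling has known pitfalls.

```python
import random
from typing import Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale) as the difference of two exponential samples."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(true_count: int, epsilon: float, rng: Optional[random.Random] = None) -> float:
    """Release a count under epsilon-differential privacy (Laplace mechanism).

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so the noise scale is 1 / epsilon: smaller epsilon means more noise and
    stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    return true_count + laplace_noise(1.0 / epsilon, rng)
```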

Greater Focus on AI-Specific Compliance Standards

As governments implement AI-related legislation, like the EU AI Act (in force since 2024, with obligations phasing in over several years), and weigh proposed U.S. frameworks, expect more pressure on vendors and enterprises to document model behavior, risk scoring, and user impact. Companies that adopt centralized governance and policy enforcement tools now will have a head start.

Align Your AI Strategy with SaaS Governance That Scales

AI data security isn’t just a technical problem—it’s a strategic imperative. As organizations embrace AI tools across every department, the need for centralized oversight, policy enforcement, and visibility is growing fast. Zylo gives enterprises the control they need to manage AI usage across the full SaaS portfolio—eliminating shadow AI, improving risk posture, and unlocking smarter decisions.

Get ahead of emerging AI threats with a SaaS Management strategy built for the future. Explore Zylo’s AI-aware governance solutions today.