3 AI Risks Hidden in Plain Sight


Updated on February 19, 2026 with new stats.

Artificial intelligence adoption is accelerating across industries, with organizations embedding AI into critical business functions at an unprecedented pace. According to a McKinsey Global Survey on AI, half of respondents say their organizations have adopted AI in two or more business functions, a sharp increase from less than a third in 2023. This rapid expansion extends to SaaS investments: spending on AI-native apps rose 108% year over year.

However, as AI adoption grows, so does the risk of shadow AI: the use of artificial intelligence tools and models without IT oversight. Just as shadow IT introduced unmanaged software risks, shadow AI presents new security, compliance, and governance challenges that organizations must address. Without visibility into AI usage, companies face potential data leaks, regulatory breaches, and uncontrolled AI-driven decision-making.

Shadow AI refers to the unauthorized use of artificial intelligence tools within an organization, often bypassing IT oversight and security protocols. Similar to shadow IT, where employees introduce unapproved software, shadow AI emerges when teams deploy AI models, chatbots, or automation tools without visibility from IT or compliance teams.

Traditional software stores information; AI models can also ingest, analyze, and repurpose it in new ways. Sensitive information could become part of a model’s training data, potentially moving it from inside the company into the public domain at scale. And as AI processes sensitive company data, it can generate decisions that affect operations and even introduce compliance risks.

Without proper monitoring, organizations may unknowingly expose proprietary or customer information, create biased AI outputs, or breach industry regulations. As AI capabilities continue to expand, managing shadow AI becomes a necessary part of IT governance to mitigate risks while still enabling innovation.

The rapid adoption of AI tools is driven by a variety of factors, many of which are outside the direct control of IT teams. From easy access to AI-powered applications to decentralized purchasing, organizations must navigate a complex landscape to maintain visibility and control.

AI tools are more available than ever, with many offering free or low-cost access. Employees can now integrate AI models into their workflows with minimal effort, whether through standalone platforms or embedded features in existing software. Open-source AI models, such as large language models (LLMs) and image-generation tools, further lower the barrier to entry, allowing business users to experiment without IT oversight.

Rather than centralized IT teams, individual business units increasingly control enterprise software spending. Employees and lines of business are responsible for 87% of applications and 85% of SaaS spending. This decentralized approach makes it difficult to enforce AI governance, as purchasing decisions are made without consistent oversight or standardization.

SaaS providers are integrating AI into existing platforms, often without requiring separate purchases or approvals.

Collaboration tools like Microsoft Teams, CRM platforms such as Salesforce, and business intelligence software like Tableau now include AI-powered automation, recommendations, and predictive analytics. For example, Salesforce Einstein enhances CRM workflows with AI-driven insights, while Microsoft Copilot brings generative AI into workplace applications.

As a result, companies may be using AI without realizing the extent of their exposure, making it difficult for IT teams to track and assess AI-related risks.

Without clear governance models, AI tools enter organizations without security assessments, compliance checks, or purchasing approvals. Many enterprises lack formal policies for AI use, creating inconsistencies in how different teams adopt and manage AI solutions. In security and procurement reviews, AI functionality may not be flagged or assessed, leading to uncontrolled adoption across multiple business functions.

Organizations are under pressure to improve efficiency, and AI presents a compelling solution. Employees use AI to automate repetitive tasks, generate content, or analyze large datasets without waiting for IT to vet and approve tools. This speed-first mindset can result in shadow AI deployments that bypass risk assessments, increasing the likelihood of data security vulnerabilities and compliance violations.

Many employees lack the training to use AI responsibly, leading to unintentional risks. Without proper guidance, employees may upload sensitive data into AI models, trust AI-generated outputs without verification, or fail to recognize security risks associated with AI-generated content. A lack of AI literacy across departments contributes to the uncontrolled spread of shadow AI.

AI models often promise high-value insights but may not align with actual business needs. As a result, employees may experiment without IT support.

Businesses and employees alike are growing more enthusiastic about using AI. However, employees may introduce AI tools that serve individual needs rather than company-wide goals. This can create fragmented and unaligned AI adoption.

Casual users who intermittently engage with AI tools may overlook security best practices, increasing the risk of data leaks or compliance violations.

Shadow AI and shadow IT both involve technology that operates outside IT’s direct oversight, but their risks and impacts differ. Shadow IT refers to unauthorized applications or software used by employees, while shadow AI specifically involves artificial intelligence tools and models that function without governance.

Unlike shadow IT, which is largely about unapproved software adoption, shadow AI introduces new concerns related to data privacy, automated decision making, and AI-generated risks. AI tools can process sensitive company data, make predictions, and even automate workflows—all without IT visibility, increasing the potential for compliance issues, security breaches, and financial loss.

Shadow IT has long been a challenge for organizations, with employees using unauthorized software or SaaS applications to improve productivity. This often includes file-sharing platforms, collaboration tools, and personal cloud storage solutions that bypass IT security protocols.

Many companies have implemented SaaS Management tools and access controls to mitigate the risks of shadow IT. However, as more enterprise software integrates AI, traditional IT oversight strategies may no longer be sufficient to address the growing challenge of AI-driven tools entering the workplace.

Shadow AI takes the risks of shadow IT further by introducing self-learning models, generative AI, and predictive analytics that can operate outside IT’s control. Employees may use AI-powered assistants, automation tools, or external AI APIs to process company data, often without understanding how data is stored, shared, or used by these models.

Unlike unauthorized SaaS tools, which can often be detected through network activity, shadow AI may exist within approved platforms or be embedded within internal workflows. As AI capabilities expand, companies must develop governance models that specifically address unregulated AI adoption, ethical risks, and compliance challenges.

As shadow AI adoption increases, organizations must consider the broader risks and business implications. From security concerns to compliance challenges, the lack of AI oversight can result in significant financial, legal, and reputational damage.

Shadow AI introduces serious cybersecurity risks, as employees may unknowingly input sensitive data into AI models that store or process information externally. Without IT controls, AI-generated outputs may also be used in critical business decisions without proper validation.

The 2025 SaaS Management Index report found that 93% of IT leaders have varying degrees of concern about the data security risks associated with AI tools. These risks include data leaks, unauthorized access, and vulnerabilities in third-party AI platforms. Companies must establish AI security policies to prevent employees from exposing proprietary information through unmanaged AI applications.

AI models that process and store corporate data may violate regulations and compliance standards such as GDPR, HIPAA, and SOC 2, particularly when data handling policies are unclear. Shadow AI can result in unintentional compliance breaches because companies struggle to track where data is being processed, stored, or used in AI workflows.

Organizations operating in highly regulated industries face additional risks, as unapproved AI models may generate incorrect financial reports, biased hiring decisions, or unauthorized medical recommendations, leading to regulatory penalties and legal liabilities.

The financial impact of shadow AI extends beyond security risks, as uncontrolled AI adoption can drive up IT costs. Many organizations are investing heavily in AI-powered applications, with spending on AI-native applications rising 108% in 2025 and averaging $1.2M per organization.

AI adoption also introduces unpredictable costs due to consumption-based pricing models. Many SaaS vendors now charge based on usage, API calls, or AI-generated content, making it difficult for IT teams to track spending.
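To see why usage-based pricing is hard to predict, it can help to total charges the way a usage-billed vendor would. The sketch below is a minimal illustration, not any vendor's actual billing logic: the vendor names, unit counts, per-unit prices, and budgets are all hypothetical, and `UsageRecord`, `spend_by_vendor`, and `over_budget` are illustrative names. Amounts use `Decimal` to avoid floating-point rounding in currency math.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class UsageRecord:
    vendor: str          # hypothetical vendor identifier
    units: int           # billed units, e.g. API calls or generated items
    unit_price: Decimal  # assumed price per unit in USD


def spend_by_vendor(records):
    """Sum consumption-based charges per vendor."""
    totals = defaultdict(Decimal)  # Decimal() starts at 0
    for r in records:
        totals[r.vendor] += r.units * r.unit_price
    return dict(totals)


def over_budget(totals, budgets):
    """Return vendors whose spend exceeds budget; a vendor with no budget is treated as budget 0."""
    return sorted(
        vendor for vendor, amount in totals.items()
        if amount > budgets.get(vendor, Decimal(0))
    )


# Illustrative usage with made-up numbers.
records = [
    UsageRecord("assistant-api", 120_000, Decimal("0.002")),
    UsageRecord("assistant-api", 80_000, Decimal("0.002")),
    UsageRecord("image-gen", 500, Decimal("0.04")),
]
budgets = {"assistant-api": Decimal("300"), "image-gen": Decimal("50")}
totals = spend_by_vendor(records)
flagged = over_budget(totals, budgets)
print(totals, flagged)
```

Treating a vendor with no budget as over budget is a deliberate choice in this sketch: any spend on an unplanned AI tool gets surfaced for review instead of passing unnoticed.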

Without centralized visibility into AI-driven SaaS spending, organizations risk overspending on AI-powered features that may not be fully utilized or necessary for business operations.

Uncontrolled AI use can impact an organization’s reputation, especially when AI-generated content, recommendations, or decisions are made without oversight. AI tools can produce biased hiring decisions, inaccurate financial insights, or misleading marketing content, damaging brand credibility. If customers or stakeholders discover that a company is using unregulated AI tools that compromise security or privacy, it can lead to loss of trust, negative media coverage, and legal challenges.

AI models, particularly generative AI tools, can produce misleading, inaccurate, or biased content. Employees relying on AI-generated insights without verifying their accuracy may introduce errors into financial reporting, marketing strategies, or customer service interactions.

Bias in AI models is another growing concern, as algorithms trained on incomplete or skewed datasets can reinforce discriminatory patterns. Without proper AI governance, organizations risk deploying biased AI models that result in legal and ethical complications.

To effectively control shadow AI, organizations must take a proactive approach that includes leadership buy-in, policy development, employee education, and continuous oversight. Without proper governance, AI tools can introduce security risks, compliance violations, and unexpected financial burdens. The following strategies help businesses manage shadow AI while still enabling innovation and productivity.

Executive leadership plays a critical role in AI governance. Yet, many organizations struggle to communicate the full scope of risks associated with shadow AI. IT teams must engage C-suite leaders and department heads to ensure they understand how unmanaged AI adoption can impact data security, compliance, and operational efficiency.

Shadow AI governance requires more than just IT intervention—it demands cross-functional collaboration from legal, finance, compliance, and HR teams. By ensuring leadership is aligned on the risks and responsibilities associated with AI, organizations can develop a more structured, strategic approach to AI oversight.

AI adoption is accelerating, and IT leaders recognize the need for formal governance. According to the 2025 SaaS Management Index, 81.8% of IT leaders have documented policies specifically governing AI tools. However, the effectiveness of these policies depends on consistent enforcement, regular updates, and employee awareness.

Organizations should create clear guidelines outlining how AI tools can be used, which tools are approved, and how data should be handled. Governance models should include risk assessments, procurement approvals, and compliance reviews to ensure AI adoption aligns with security best practices.
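One way to make "which tools are approved, and how data should be handled" concrete is to pair an approved-tool list with a data classification ceiling for each tool. The sketch below assumes a hypothetical four-level classification scheme and made-up tool names; `DataClass`, `APPROVED_TOOLS`, and `check_usage` are illustrative and not part of any product.

```python
from enum import IntEnum


class DataClass(IntEnum):
    """Hypothetical data classification levels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical registry: approved AI tool -> most sensitive data class it may receive.
APPROVED_TOOLS = {
    "enterprise-copilot": DataClass.CONFIDENTIAL,
    "public-chatbot": DataClass.PUBLIC,
}


def check_usage(tool: str, data_class: DataClass) -> tuple[bool, str]:
    """Return (allowed, reason) for sending data of a given class to a tool."""
    ceiling = APPROVED_TOOLS.get(tool)
    if ceiling is None:
        # Unlisted tools are denied and routed to a review instead of being allowed by default.
        return False, f"{tool} is not an approved AI tool; request a review"
    if data_class > ceiling:
        return False, f"{tool} is not approved for {data_class.name} data"
    return True, "allowed"


print(check_usage("enterprise-copilot", DataClass.INTERNAL))
print(check_usage("public-chatbot", DataClass.CONFIDENTIAL))
print(check_usage("unknown-tool", DataClass.PUBLIC))
```

Denying unknown tools by default fits the goal of sending new AI tools through risk assessment and procurement approval before use.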

Many employees use AI-powered tools without realizing the risks involved or whether their usage aligns with company policies. IT teams must develop clear communication strategies to ensure employees understand:

By keeping employees informed, organizations can reduce the likelihood of unintentional security breaches or compliance violations tied to unauthorized AI tools.

Rather than banning AI tools outright, companies should define when and how employees can leverage AI for their work. A structured approach includes:

By prioritizing risk management rather than complete restriction, organizations can encourage responsible AI adoption without stifling innovation.

Shadow AI governance should not be limited to IT teams—it requires collaboration between security, legal, finance, HR, and business unit leaders. Cross-functional teams can help assess AI risks, establish best practices, and monitor AI adoption trends across the organization.

By integrating AI governance into existing risk management frameworks, businesses can develop a unified approach that balances innovation with security and compliance.

Employees need proper training to ensure they understand AI’s limitations, biases, and security implications. AI literacy programs should educate employees on:

Regular training ensures that employees remain aware of evolving AI risks and best practices, reducing shadow AI exposure across the organization.

AI technology evolves rapidly, making static governance policies ineffective. Organizations should regularly review and update AI policies to account for:

By keeping policies agile and adaptive, organizations can stay ahead of AI-driven risks rather than reacting to them.

Many AI-powered features are introduced through software updates, often without IT teams being fully aware. Organizations should establish a process for reviewing AI functionality in SaaS platforms to prevent:

Regular monitoring and full visibility ensure that AI capabilities are properly assessed and aligned with business needs before they become unregulated shadow AI risks.

Open communication with your SaaS vendors is also key to understanding the risks their AI tools may introduce to your business.

Once an organization identifies shadow AI within its environment, the next step is to assess its impact, mitigate risks, and establish governance processes to prevent further unapproved AI use. Addressing shadow AI requires visibility, collaboration, and structured policy enforcement.

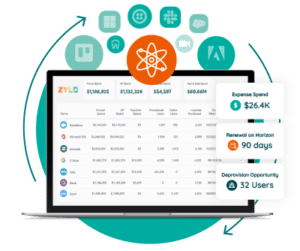

Assess the extent of AI usage: Identify which AI tools are in use, who is using them, and whether they integrate with existing enterprise systems. This can be done through SaaS discovery tools (like Zylo), expense tracking, and security audits.

With this approach, organizations can transition from reactive AI discovery to proactive AI management, ensuring that AI-powered innovation happens within a secure, compliant framework.
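The expense-tracking part of assessing AI usage can be approximated with a simple keyword scan over expense line items. This is a rough sketch under stated assumptions: the expense fields (`vendor`, `description`, `department`, `amount`), the keyword set, and the approved-vendor list are all hypothetical, and whole-word matching will both miss AI tools with unrelated names and flag some non-AI items, so results still need human review.

```python
import re
from collections import defaultdict

# Hypothetical inputs: tune both sets to your own environment.
AI_KEYWORDS = {"ai", "gpt", "copilot", "llm"}
APPROVED_VENDORS = {"enterprise-copilot"}


def find_unapproved_ai(expenses):
    """Group unapproved, AI-looking expenses by department.

    Each expense is a dict with 'vendor', 'description', 'department', 'amount'.
    """
    found = defaultdict(list)
    for e in expenses:
        text = f"{e['vendor']} {e['description']}".lower()
        # Split into whole words so "ai" does not match "email" or "maintenance".
        words = set(re.findall(r"[a-z0-9]+", text))
        if words & AI_KEYWORDS and e["vendor"].lower() not in APPROVED_VENDORS:
            found[e["department"]].append(e["vendor"])
    return dict(found)


# Illustrative usage with made-up expense lines.
expenses = [
    {"vendor": "WriterBot", "description": "AI writing assistant subscription", "department": "Marketing", "amount": 49},
    {"vendor": "enterprise-copilot", "description": "Copilot seats", "department": "Sales", "amount": 900},
    {"vendor": "MailHost", "description": "Email hosting", "department": "IT", "amount": 30},
    {"vendor": "DataChat", "description": "LLM analytics add-on", "department": "Finance", "amount": 200},
]
result = find_unapproved_ai(expenses)
print(result)
```

Grouping by department reflects the decentralized purchasing described earlier: it points IT toward the business units that are buying AI tools on their own.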

Effectively managing shadow AI requires more than just detection. It involves ongoing monitoring, employee education, and governance updates to keep AI adoption aligned with business needs and security requirements. Here are a few strategic best practices you should follow.

By following these best practices, organizations can reduce the risks of shadow AI while enabling employees to leverage AI-powered tools effectively and securely.

Managing shadow AI requires clear governance, proactive monitoring, and enterprise-wide awareness. Zylo provides visibility into SaaS applications, including AI-powered tools, helping organizations track AI adoption, manage risks, and optimize spending.

Want to learn more about AI? Download the 2025 SaaS Management Index.

Shadow AI refers to the use of artificial intelligence tools within an organization without IT oversight or formal governance. Employees may adopt AI-powered applications for automation, data analysis, or content generation, often without understanding the security or compliance risks.

Shadow AI emerges due to the accessibility of AI tools, decentralized purchasing, and the rapid adoption of AI-powered SaaS applications. Many employees use AI without IT approval, either through existing software integrations or standalone AI platforms.

Unregulated AI adoption can introduce security vulnerabilities, compliance violations, financial risks, and reputational damage. AI tools may expose sensitive data, generate biased or misleading outputs, or result in unexpected SaaS costs due to consumption-based pricing models.

AI adoption is growing rapidly, with employees and business units expensing AI-powered tools at increasing rates. Without visibility into AI usage, organizations risk data exposure, operational inefficiencies, and compliance challenges.

Shadow AI removes IT oversight from AI-driven decision-making, security monitoring, and cost management. Without governance, organizations cannot assess how AI tools handle data, whether they comply with regulations, or if they introduce operational risks.

Traditional AI adoption follows structured implementation processes, with IT teams ensuring security, compliance, and integration with existing systems. Shadow AI, on the other hand, enters the organization unmanaged, often through individual employees or business units.

Yes, shadow AI can lead to data privacy violations, regulatory noncompliance, and biased decision making. Many AI tools store and process data externally, potentially conflicting with industry regulations like GDPR or HIPAA. Ethical concerns arise when AI-generated outputs lack transparency or introduce discriminatory biases.

Organizations should establish AI governance policies, monitor AI adoption, educate employees on AI risks, and integrate AI oversight into security and compliance frameworks. Regular audits and SaaS Management tools can help detect unauthorized AI use.
